I started Algorotoscope as an escape from the 2016 election. I wanted to learn more about machine learning and TensorFlow while also escaping the world. Once I had a working model, I wanted to get out in the world more to take photos and videos. I lost the Amazon Machine Image (AMI) for my Algorotoscope process when I switched accounts, so I haven't produced anything new in a while. I have just been working from images I had already applied my models to. Now I have my AMI back, along with a newly optimized process for producing texture transfer models and applying them to large numbers of images. So I am looking to continue my exploration. The emphasis will be on being out in the world taking photos that speak to the characteristics of each ML model, applying the models to my new and old images, and telling stories that support them.

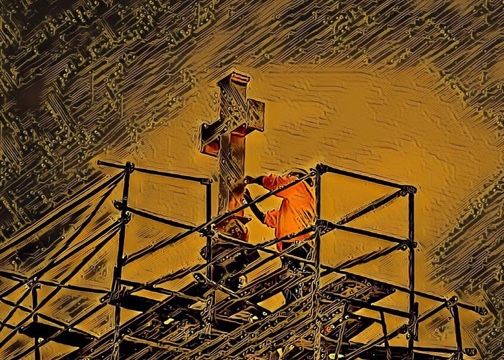

If I have to reaffirm why I do Algorotoscope, it is less about learning ML or escaping the world now. I'd say it is about exploring the world: finding relevant images I can train models on, then going out in the world to take photographs and film videos that leverage the strengths of each model, both visually and contextually. I don't want to be a one-trick pony, applying the dark models to everyday images. I want to come at it from multiple dimensions, reflecting on both the physical and digital spaces we live in. The overlap of the two. The obfuscation. The reality distortion field that consumes us online, but also colors, paints, and texturizes the actual world around us.

To understand where I am coming from, it helps to understand how I see the world around us. I see APIs. I see millions of digital interfaces distorting our worlds. Recent examples include Facebook, Twitter, and the 2016 election, or how our legal system is being automated with algorithms. The human resources processes of our companies are flowing through these pipes, deciding who gets hired and who doesn't. I see our world flowing through APIs each day, and I see how existing biases are being codified in the pipes and gears that are powering not just our online lives, but our physical world. This is why I publish Algorotoscope images with each post you see on API Evangelist: they are a visual representation of the space I'm telling a story in. I need a steady stream of images that are relevant to how I am describing the knobs, levers, and gears of the machine, while also giving some context for the bias being baked in.

Algorotoscope is how I show the way I see the digital world consuming our physical worlds. Consuming them, then dictating and shaping our lives. I don't know how else to illustrate it. I am a software engineer, architect, and storyteller. I can't draw this shit. I can only express it using ML and photography, the two things I am capable of doing in this moment. The Algorotoscope images are the closest I can come to articulating what I see distorting and obfuscating our lives. They are the static in our heads after we've been online too long. They visualize why we lack control over our lives right now. Each one is a quick snapshot of one more moment of our lives being reduced to a transaction.