A Man of Parts and Learning, by Fara Dabhoiwala, on the portrait of Francis Williams, is behind my favorite new Algorotoscope filter for revealing algorithmic bias across the technology that is consuming us each day. The story of this amazing man, as well as of those who were threatened by him and worked so hard to obfuscate and disappear Francis Williams from history, reflects the intent behind my Algorotoscope work. The resulting TensorFlow machine learning model, trained on the Francis Williams portrait, is perfect for casting a dark shadow on how the Internet and artificial intelligence are working to obfuscate and disappear black voices and lives today.

It isn’t just the direct efforts of people like Edward Long hundreds of years ago, or Elon Musk today, to erase black excellence. It is also the mundane, everyday bias of the art and history community in dismissing Francis Williams’ story and legacy. It is this institutional bias that I am looking to shine a light on, using Algorotoscope to train models and apply them to the images I share across all of my API storytelling. AI models are trained on the same bias we see baked into the system over 250+ years of art and history. AI models will codify all of the bias you read about in this amazing story of the life of Francis Williams, and automate it.

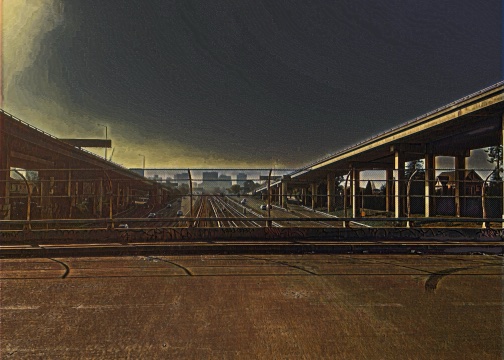

I have trained a TensorFlow texture transfer machine learning model on the portrait of Francis Williams. I then apply this model to photos I have taken over the years. I have chosen a mix of photos to highlight algorithmic bias, but few speak louder than the handful of photos taken from the [once prosperous black neighborhood of Oakland devastated by the installation of the 880 Freeway in the 1950s](https://www.oaklandca.gov/topics/oaklands-history-of-resistance-to-racism#:~:text=Highway%2017%20(now%20I%2D880rest%20of%20the%20Nimitz%20Freeway). These images are the dark shadow of the Francis Williams portrait applied to the photos I took when I lived in this neighborhood.

This neighborhood was at the time a thriving black community that was deliberately targeted by white transit planners in the 40s, 50s, and 60s. The freeway forced out the residents in its path, and over the next forty years it also forced out successful white and black owned businesses, leaving the community everyone drives past today.

When you drive or walk around this neighborhood it is clearly under severe strain, with the community disappeared by the speed at which you can drive on the freeway above. It is crazy to think about the multiple city blocks that were destroyed to produce this monstrosity of concrete infrastructure. Think about all of the lives that were destroyed so people could drive over the top of this neighborhood.

If by chance you find yourself getting off at one of the exits, or living in the area like I did, you get to experience the impacts of this type of infrastructure first-hand. As a white person you tend to just think, “why can’t these black people live better?”, but when you know the history you ask, “why did these white people crush these black people’s neighborhoods and lives?”. We know the answer to this: infrastructural racism.

This is what artificial intelligence will continue to escalate in our virtual world. In similar but different ways, algorithmic bias will continue to divide communities, then obfuscate and abstract away the incompetency and damage done to human beings, and specifically to black, brown, and other marginalized people. AI infrastructure will do the work of Edward Long, but also of every art dealer and critic over the years. AI infrastructure will do the work of the white highway planners in California during the 1950s and 1960s, but also the work of every single person who has driven over Oakland on the 880 over the years and pretended not to see what was there.

Artificial intelligence is literally trained on the criminal records, municipal records, and property data of West Oakland. Artificial intelligence will perpetuate the same biases and violence inflicted on Oakland, but in the dark shadows of austerity created by the Internet. I absolutely love the story of Francis Williams’ intellect and life journey. I love helping ensure his story is told and lives on as part of history. I think the shadow of Francis Williams’ story comes through the AI filter applied by the ML model I used to steal from his portrait and apply to my photos. It provides me with a useful way to share Francis Williams’ story while using it as a cautionary tale for what is happening today with artificial intelligence, helping better highlight bias in our physical and virtual worlds.

I will use these images, plus others I have applied this AI model to, in my storytelling on Kin Lane, Alternate Kin Lane, and API Evangelist, inviting Francis Williams into my technology storytelling from within the machine.