Project Updates

A Man of Parts and Learning, by Fara Dabhoiwala, on the portrait of Francis Williams, is my favorite new Algorotoscope filter for revealing algorithmic bias across the technology that is consuming us each day. The story of this amazing man, as well as of those who were threatened by him and worked so hard to obfuscate and disappear Francis Williams from history, reflects the intent behind my Algor...

I spent some time making some new images with my B.F. Skinner Algorotoscope models the other weekend. I am pretty happy with my palette of AI models right now. I need to get them better displayed on the site, but their tones represent many of the emotions I am trying to illuminate right now. In the meantime, I am exploring how I can better overlay images produced from different Algorotoscope models.
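
As a rough illustration of what overlaying two model outputs can mean in practice, here is a minimal sketch of a per-pixel alpha blend. It assumes both images are the same size and are already loaded as rows of (R, G, B) tuples. The `overlay` helper and its `alpha` parameter are hypothetical names chosen for this example, not part of the Algorotoscope process itself.

```python
# Hypothetical helper for illustration only: blends two same-sized images,
# each represented as a list of rows of (R, G, B) integer tuples.
def overlay(base, top, alpha=0.5):
    """Return a new image where each channel is (1 - alpha) * base + alpha * top."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    if len(base) != len(top) or any(len(a) != len(b) for a, b in zip(base, top)):
        raise ValueError("images must have the same dimensions")
    return [
        [
            # Mix each channel of the two pixels and round to an integer value.
            tuple(round((1 - alpha) * b + alpha * t) for b, t in zip(bp, tp))
            for bp, tp in zip(brow, trow)
        ]
        for brow, trow in zip(base, top)
    ]


# Two tiny 1x2 "images" standing in for the outputs of two different models.
model_a = [[(0, 0, 0), (100, 100, 100)]]
model_b = [[(255, 255, 255), (200, 0, 100)]]
blended = overlay(model_a, model_b, alpha=0.25)
```

An `alpha` near 0 keeps mostly the first image and an `alpha` near 1 keeps mostly the second. For real image files, a library such as Pillow provides `Image.blend` for the same kind of operation.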

I am not well. I have something systemic wrong with me. I am not responsible for its origins, but I am responsible for perpetuating it. It is a condition that is difficult to see despite being ubiquitous throughout our lives.

I wish I could paint the pictures I have in my head from my work as the API Evangelist. The closest I can come is projecting light and transforming images as part of my Algorotoscope Work. Some day my painting skills may get me closer to what I see emerging around us, but for right now my Algorotoscope Work is the closest I got. I am just turning the knobs and dials on the Algorotoscope lenses I have created, until the reception gets better...

I started Algorotoscope as an escape from the 2016 election. I wanted to learn more about machine learning and TensorFlow while simultaneously wanting to escape the world. Once I established a working model I wanted to get out in the world more to take photos and videos. I lost the Amazon Machine Image (AMI) for my Algorotoscope process as part of switching accounts, so I haven’t produced anything new in a while. I am just working from images I have already applied my models to. Now I have my...

I had an AWS machine image that had my texture transfer process all set up. I had my AWS account compromised and someone spun up servers across almost every AWS region, and my bill was pushing $25K. Luckily it wasn’t due to anything I had done and AWS didn’t charge me for any of it. However, in the shuffle from that account to a new API Evangelist-specific account, my AWS machine image got lost, forcing me to rebuild from scratch. It is always a daunting thing to dive back into the world of ...

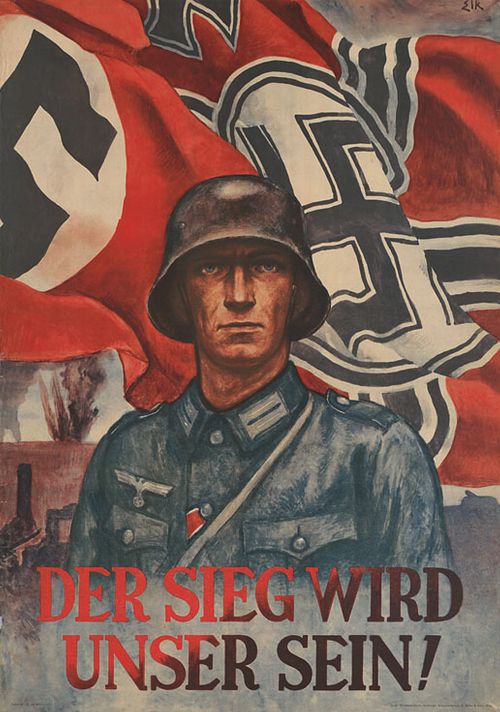

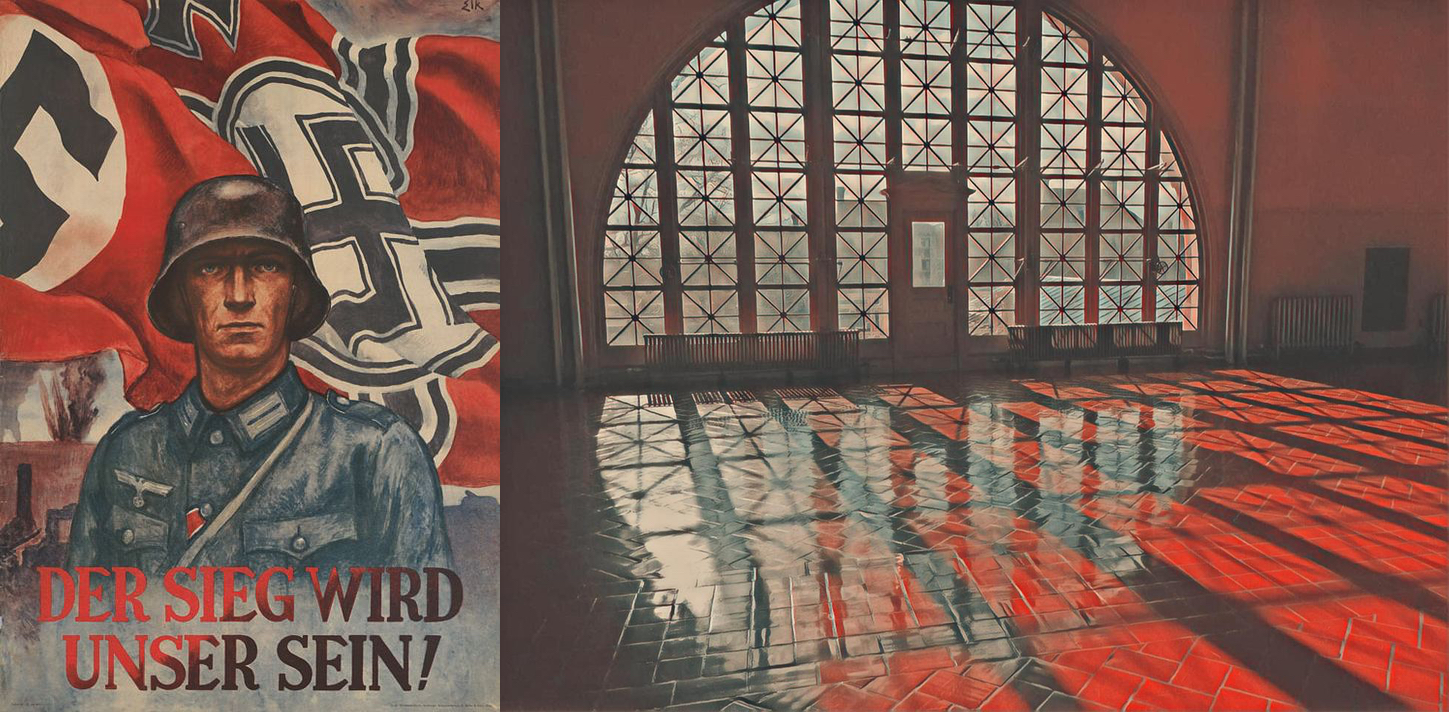

Historically the ML models I have trained for Algorotoscope have been fairly dark in nature. I had the training wheels of the models that came with the ML process I adopted, but I quickly began training on Salvador Dali and playing around with a distortion in time, and then found myself drowning in Nazi and Russian propaganda. I would take these models and apply them to normal everyday photos ...

I have bounced around quite a bit from the original TensorFlow model I was using when I started this work. Algorithmia had done a lot of the heavy lifting for me, but that original Amazon Web Services machine image eventually fell into disrepair and I couldn’t operate it anymore. For the last couple of years I bounced around trying different texture transfer services, and kicking the tires on a variety of TensorFlow models that were cheaper to operate, but none of them produced the same resul...

After organizing a couple of the latest batches of images I produced as part of my Algorotoscope work, I wanted to step back and see what I had made. I wanted to get better at organizing some of the more interesting images I had produced using specific TensorFlow machine learning models. Much of my work is pretty random, without much connection between the images I trained my models on and the images I applied my models to; they just make for some interesting colors and textures. However...

I’ve been playing with different ways of visualizing the impact that algorithms are making on our lives. How they are being used to distort the immigration debate, and how the current administration is being influenced and p0wned by Russian propaganda. I find shedding light o...

I'm spending time on my algorithmic rotoscope work, and thinking about how the machine learning style textures I've been making can be put to use. I'm trying to see things from different vantage points and develop a better understanding of how texture styles can be put to use in the regular world.

I am enjoying using image style filters in my writing. It gives me kind of a gamified layer to my photography and drone hobby that allows ...

I am having a difficult time reconciling what is going on with the White House right now. The distortion field around the administration feels like some bad acid trip from the 1980s, before I learned how to find the good LSD. After losing their shit over her emails and Benghazi, they are willing to overlook Russi...

We are increasingly looking through an algorithmic lens when it comes to politics in our everyday lives. I spend a significant portion of my days trying to understand how algorithms are being used to shift how we view and discuss politics. One of the ongoing themes in my research is focused on machine learning, which is an aspect...

I'm thinking about my digital bits a lot lately. Thinking about the digital bits that I create, the bits I generate automatically, the bits I own, the bits I do not own, and how I can make a living with just a handful of my bits. I have an inbox full of people who want me to put my bits on their websites, and people who want to put their bits on my platform so that they are associated...

I've been working with Algorithmia to manage a large number of images as part of my algorithmic rotoscope side project, and they have a really nice omni-platform approach to allowing me to manage my images and other files I am using in my machine learning workflows. Images, files, and the input and output of heavy objects are an essential part of almost any machine learning task, and Algorithmia makes it easy to...

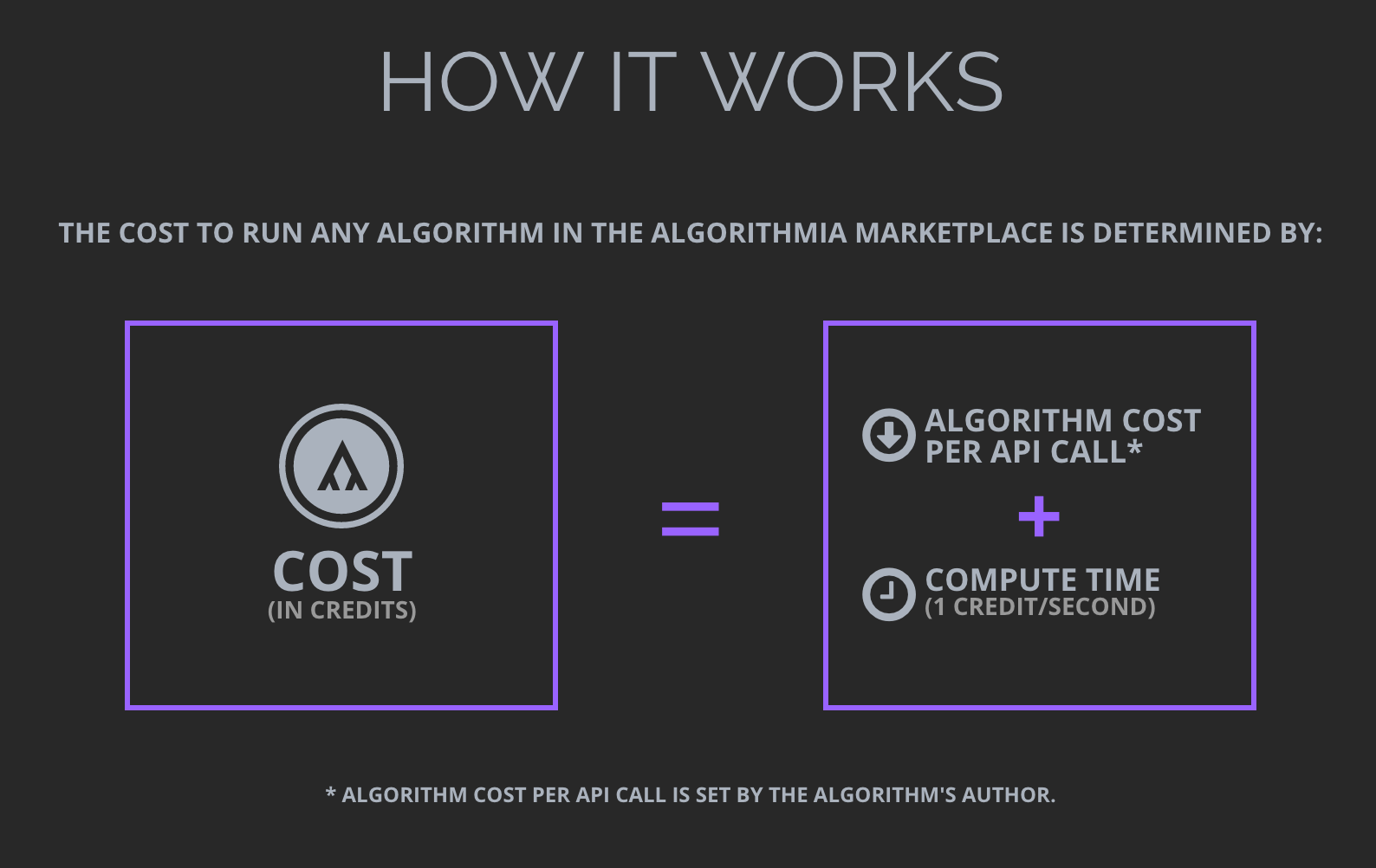

I got sucked into a month-long project applying machine learning filters to video over the holidays. The project began with me doing the research on the economics behind Algorithmia's machine learning...

I was playing around with Algorithmia for a story about their business model back in December, when I got sucked into playing with their DeepFilter ser...

I had come across Texture Networks: Feed-forward Synthesis of Textures and Stylized Images from Cornell University a while back in my regular monitoring of the API space, so I was pleased to see Algorithmia building on this work with their Deep Filter project. The artistic styles and textures you can apply to images are fun to play with, and even more fun when you apply at...

I am putting some thought into some next steps for my algorithmic rotoscope work, which is about the training and applying of image style transfer machine learning models. I'm talking with Jason Toy (@jtoy) over at
